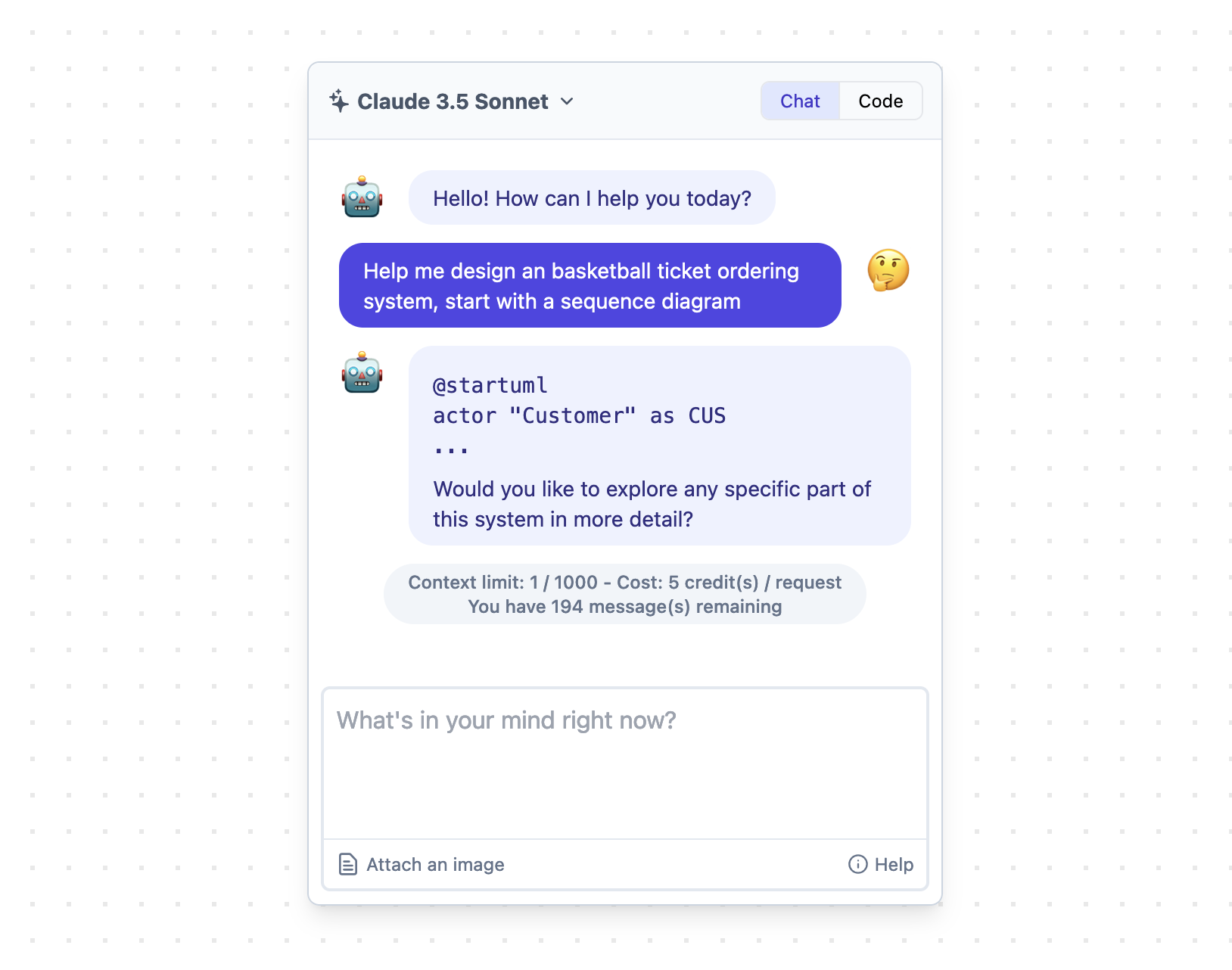

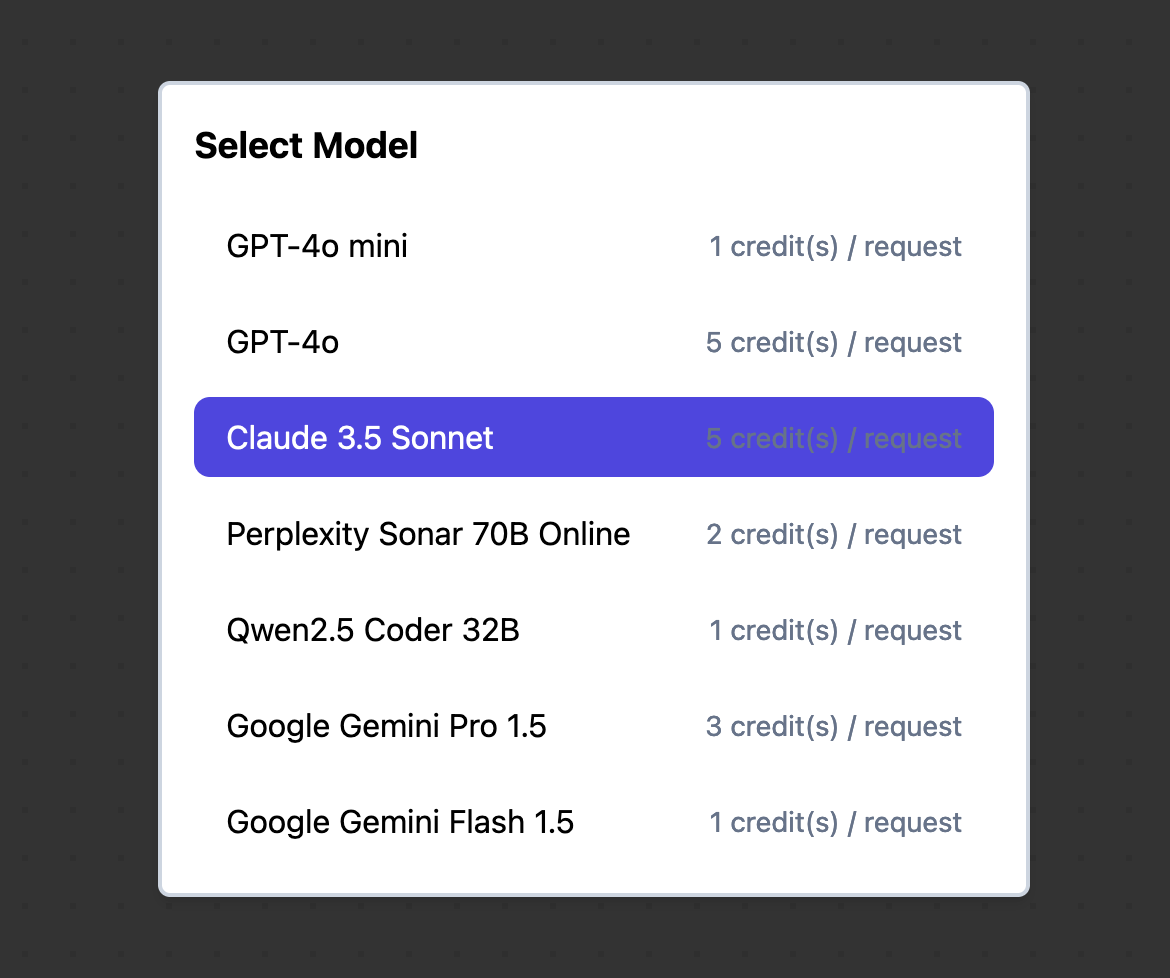

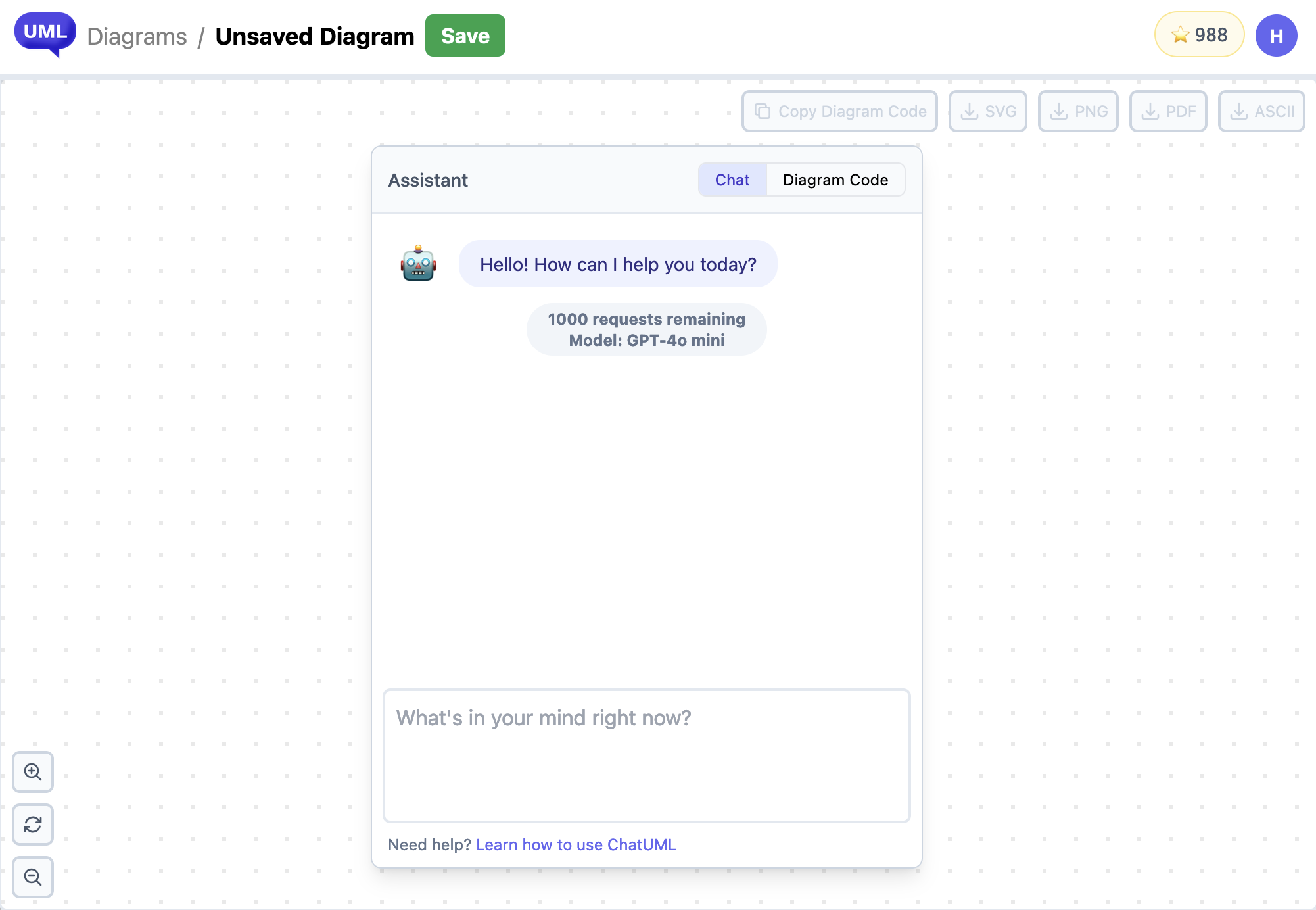

Building a new application can be daunting, especially when it comes to designing the architecture. This post explores how to leverage the power of an AI diagram generator, like ChatUML, to brainstorm, visualize, and refine your system design. We'll use the example of building a "Chat to Document" AI program to illustrate the process.

Let's say we want to build an AI-powered application that lets users upload their documents and then chat with them, asking questions that the AI answers from the documents' content. Where do we start? ChatUML can help!

Step 1: Clarification is Key

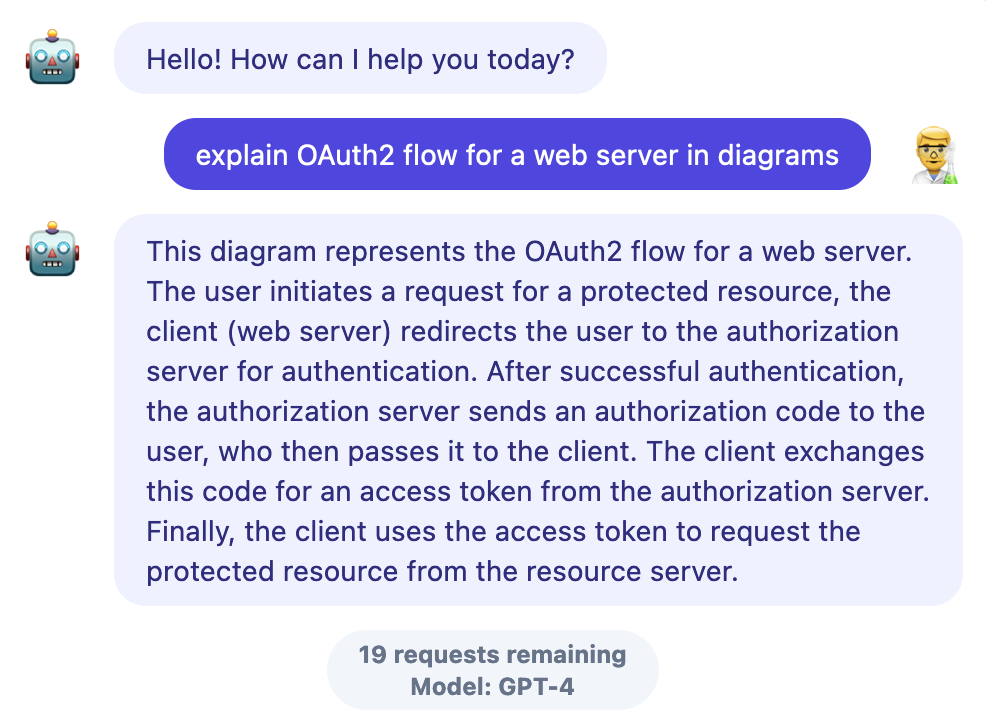

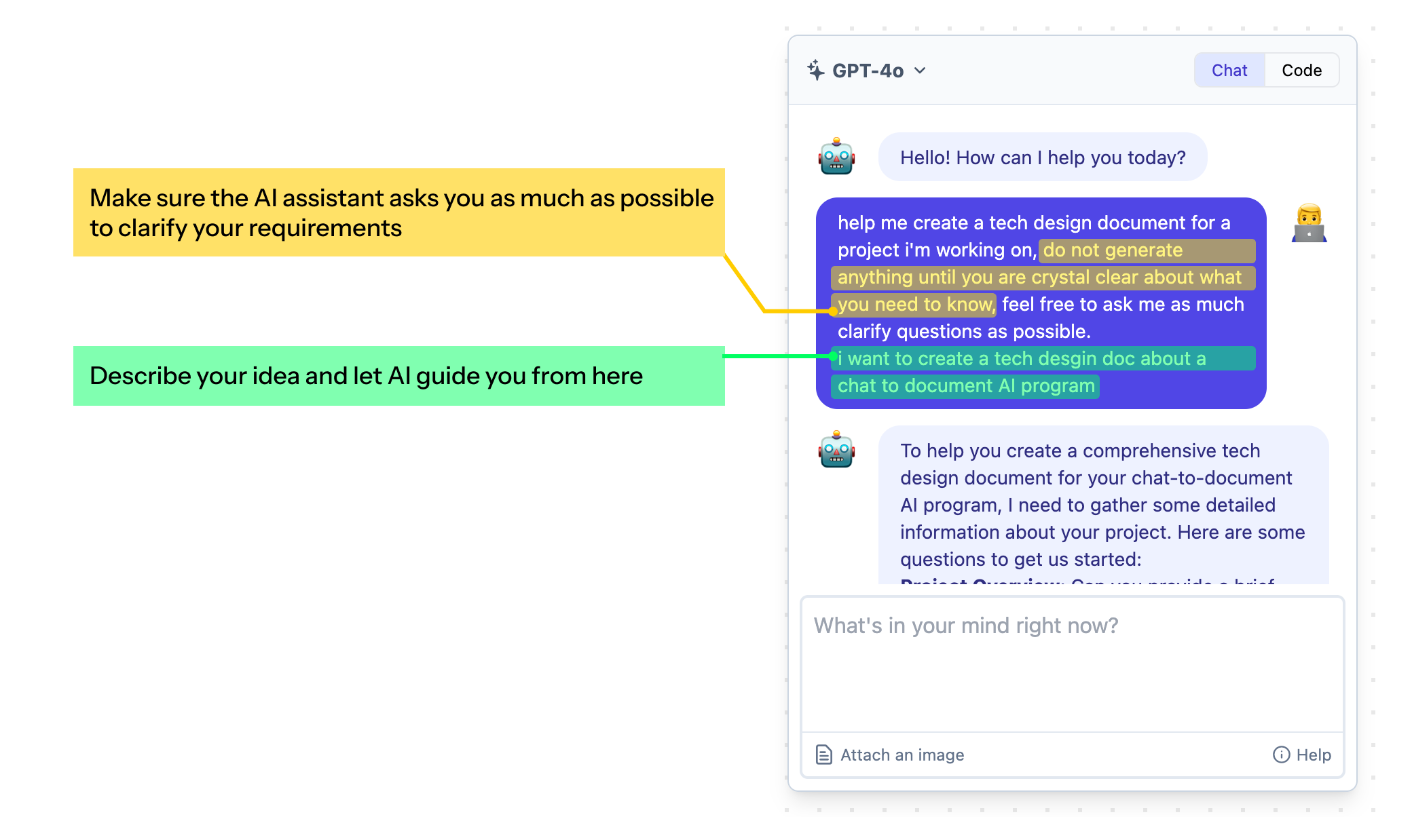

Before diving into diagrams, it's crucial to give the AI a clear understanding of your project. Ask the AI assistant to follow up with as many clarifying questions as possible to make sure it grasps the core functionality and requirements. This initial prompt sets the stage for a successful design process:

> Help me create a tech design document for a project I'm working on.
>
> Do not generate anything until you are crystal clear about what you need to know.
>
> Feel free to ask me as many clarifying questions as possible.
>
> I want to create a tech design doc about a chat-to-document AI program.

After this, the AI assistant will start asking questions so you can provide details about your project. In this case, I told the AI the following:

- This project is a system that allows users to upload their files. The files will get processed in the backend and stored in a vector DB.

- The tech stack is Rust for the backend, React for the frontend, and Postgres with vector support. I deliberately didn't tell the AI how the components should interact with each other, because I wanted it to figure that out for me.

- Users must not be able to access files that are not theirs.

Step 2: System Overview

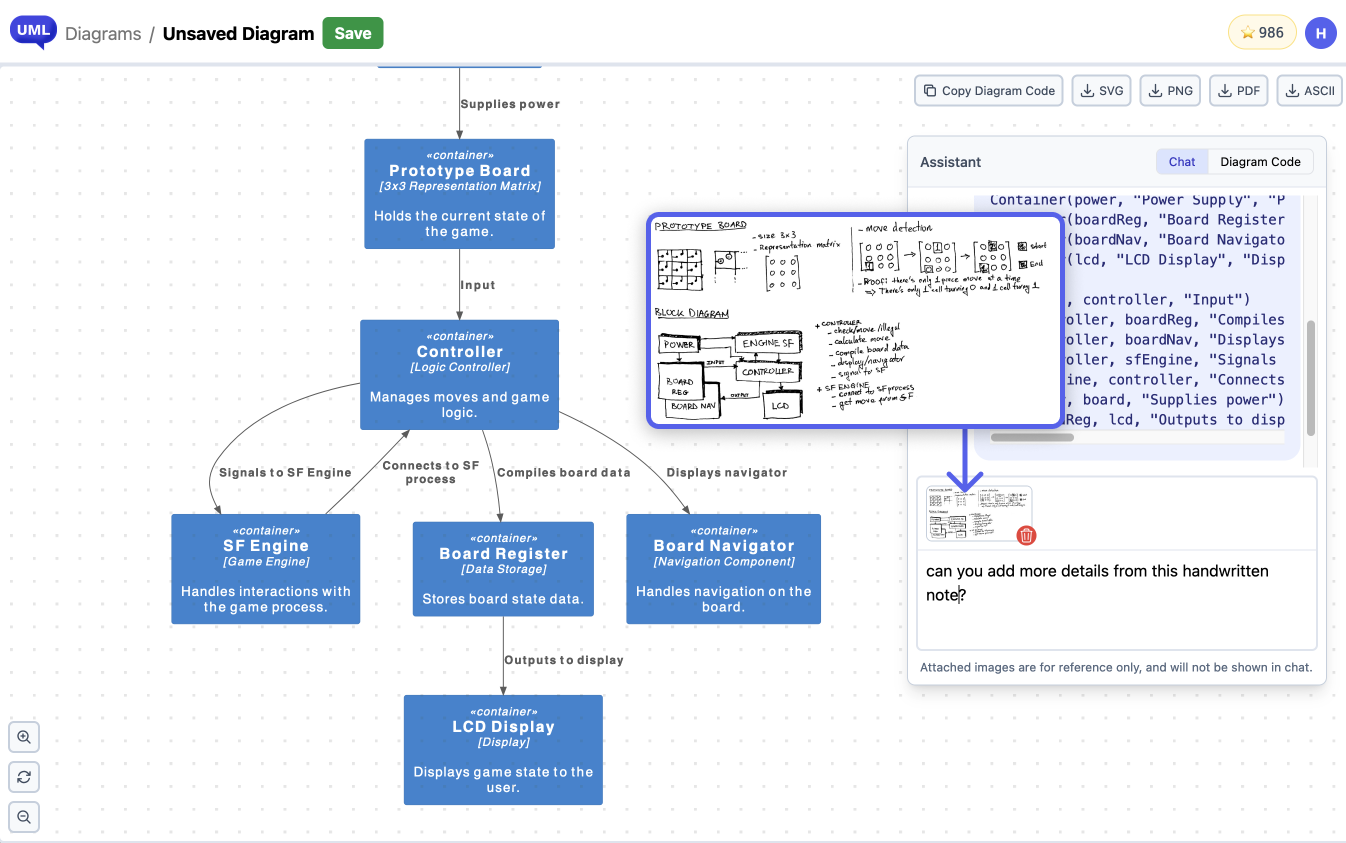

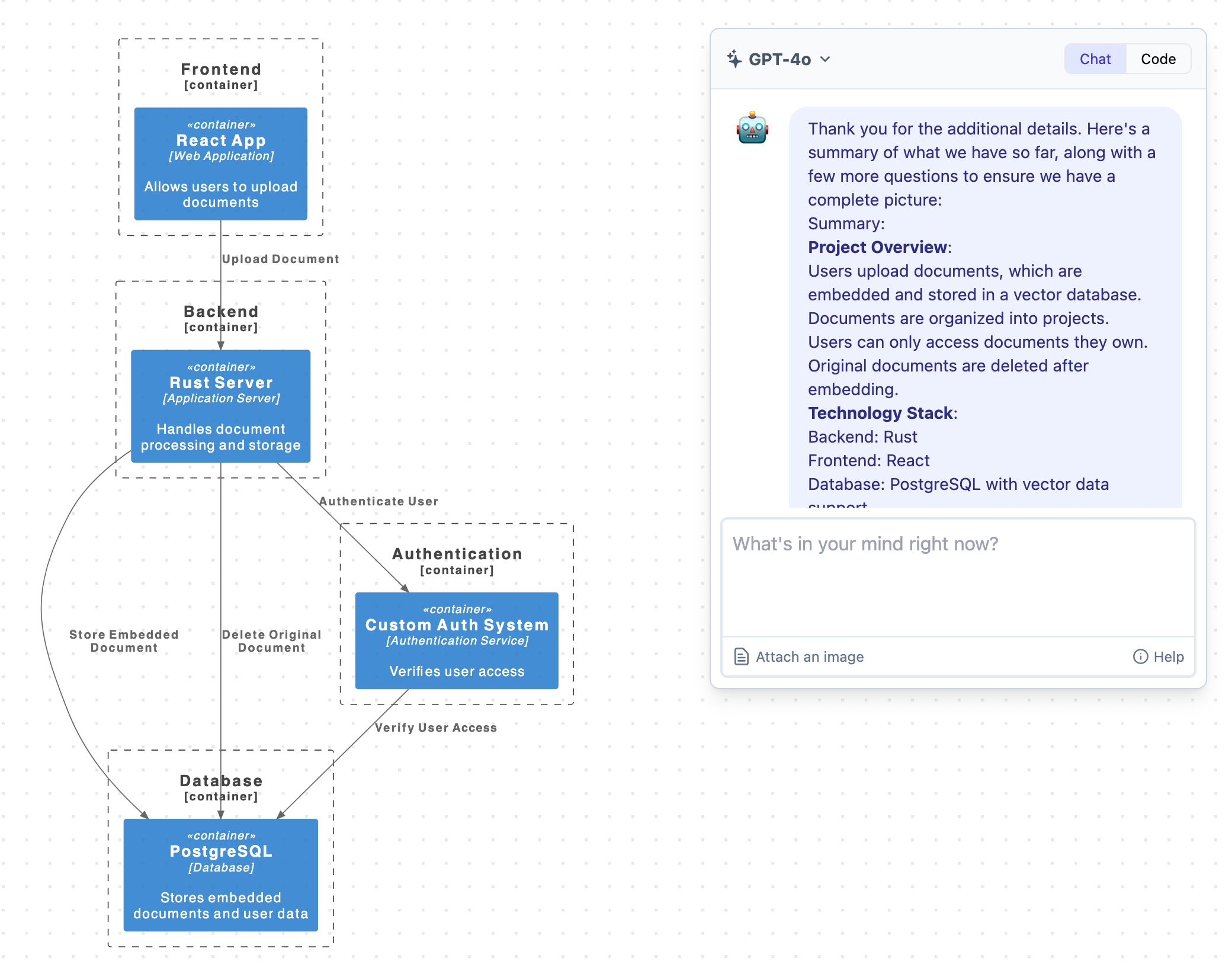

After a few rounds of discussion, the AI had a pretty clear understanding of our project, so we can ask it to generate a C4 diagram showing a high-level overview of the system. This diagram gives a bird's-eye view of the major components and their interactions:

- Frontend: The user interface where users upload their documents, sending them to the backend.

- Backend: The core logic that handles document uploads, chunking, embedding generation, and storage in the database, and that also searches for relevant chunks when answering users' questions.

- Authentication Service: Manages user authentication and authorization.

- Database: Stores users' documents securely.
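To make the overview a little more concrete, here is a minimal Rust sketch of one way the Backend could depend on the Authentication Service through a trait, so that every request coming from the Frontend is tied to an authenticated user before it touches the Database. `UserId`, `AuthService`, `InMemoryAuth`, `Backend`, and `BackendError` are hypothetical names for illustration; the in-memory token table and document list are stand-ins for the real Authentication Service and Postgres.

```rust
use std::collections::HashMap;

/// Identifier of an authenticated user (hypothetical type for this sketch).
#[derive(Debug, Clone, Copy, PartialEq, Eq, Hash)]
pub struct UserId(pub u64);

/// Authentication Service boundary: turns a session token into a user, if valid.
pub trait AuthService {
    fn authenticate(&self, token: &str) -> Option<UserId>;
}

/// Toy in-memory token table standing in for the real Authentication Service.
pub struct InMemoryAuth {
    tokens: HashMap<String, UserId>,
}

impl InMemoryAuth {
    pub fn new(tokens: &[(&str, UserId)]) -> Self {
        Self {
            tokens: tokens.iter().map(|(t, u)| (t.to_string(), *u)).collect(),
        }
    }
}

impl AuthService for InMemoryAuth {
    fn authenticate(&self, token: &str) -> Option<UserId> {
        self.tokens.get(token).copied()
    }
}

#[derive(Debug, PartialEq, Eq)]
pub enum BackendError {
    Unauthenticated,
}

/// A stored document tagged with its owner (stand-in for a Database row).
#[derive(Debug, Clone)]
pub struct Document {
    pub id: u64,
    pub owner: UserId,
    pub content: String,
}

/// Backend: generic over the auth component, so it can be swapped or mocked.
pub struct Backend<A: AuthService> {
    auth: A,
    documents: Vec<Document>,
}

impl<A: AuthService> Backend<A> {
    pub fn new(auth: A) -> Self {
        Self { auth, documents: Vec::new() }
    }

    /// Upload entry point called by the Frontend; returns the new document id.
    pub fn upload(&mut self, token: &str, content: &str) -> Result<u64, BackendError> {
        let owner = self.auth.authenticate(token).ok_or(BackendError::Unauthenticated)?;
        let id = self.documents.len() as u64 + 1;
        self.documents.push(Document { id, owner, content: content.to_string() });
        Ok(id)
    }

    /// Lists only the documents owned by the authenticated caller.
    pub fn list(&self, token: &str) -> Result<Vec<&Document>, BackendError> {
        let user = self.auth.authenticate(token).ok_or(BackendError::Unauthenticated)?;
        Ok(self.documents.iter().filter(|d| d.owner == user).collect())
    }
}
```

Keeping the Authentication Service behind a trait means the Backend never handles raw credentials itself and can be unit-tested with a fake, as `InMemoryAuth` does here.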

Step 3: Deep Dive into Components

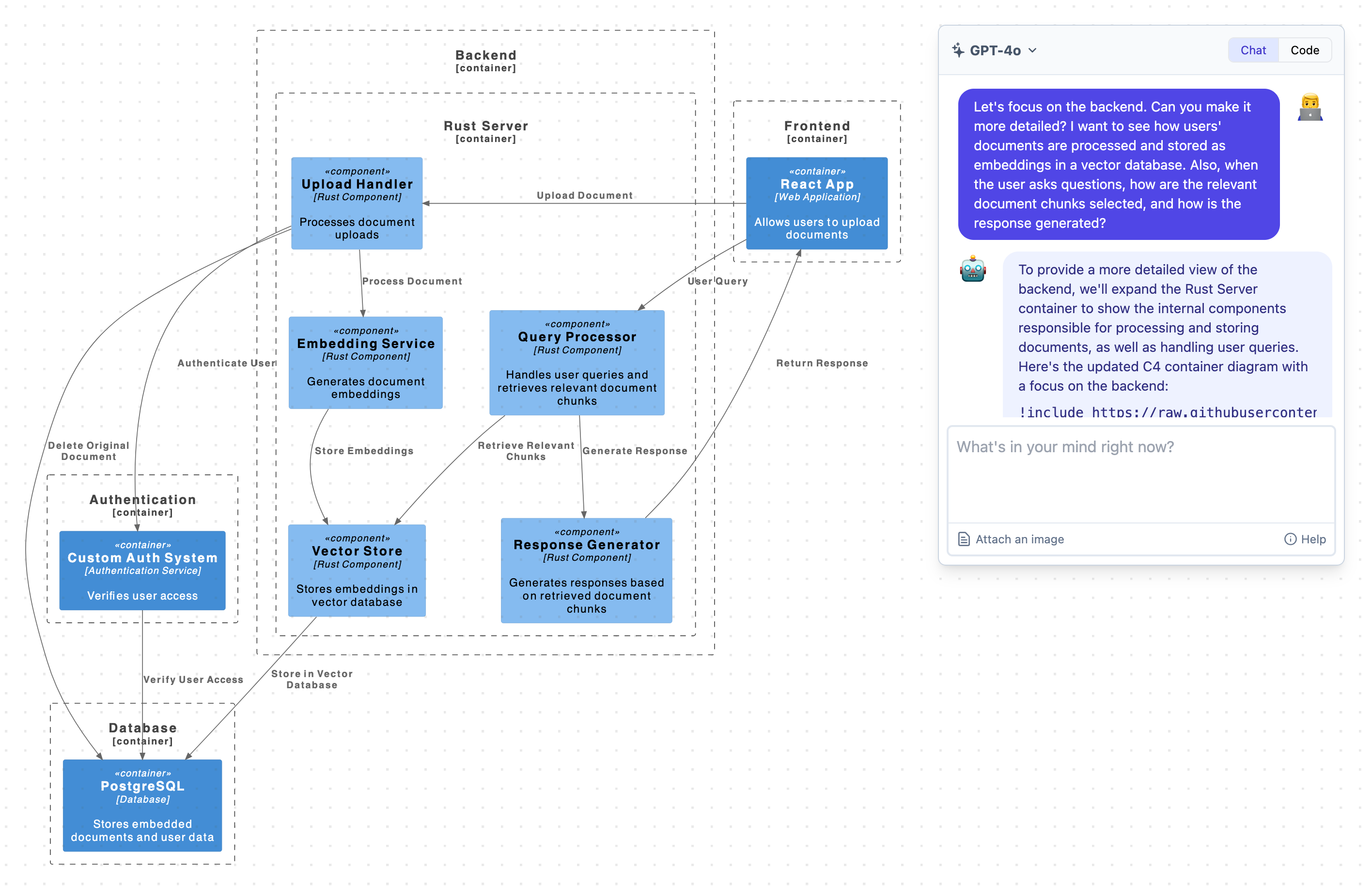

With the overview in place, we can zoom in on specific components for a more detailed understanding. Let's ask the AI to focus on the backend.

The result shows that the backend should consist of a few components that interact with each other. For the document upload and storage flow, we have:

- The Upload Service: handles users' upload requests and sends each document to the Embedding Service.

- The Embedding Service: splits each document into chunks and generates an embedding for every chunk before the chunks are stored in the database.

- The Vector Store Service: stores chunks in the database and retrieves them when needed.
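The upload flow above can be sketched in plain Rust, assuming character-based chunking with overlap and an in-memory store. `chunk_text`, `toy_embed`, `VectorStore`, and `ingest_document` are hypothetical names. `toy_embed` is only a deterministic placeholder (a normalized letter-frequency vector) standing in for a call to a real embedding model, `VectorStore` stands in for the Postgres table, and the 200-character chunks with a 40-character overlap are an arbitrary illustrative choice.

```rust
/// One chunk of an uploaded document plus its embedding.
#[derive(Debug, Clone)]
pub struct Chunk {
    pub document_id: u64,
    pub text: String,
    pub embedding: Vec<f32>,
}

/// Embedding Service, step 1: split text into windows of `size` chars
/// where consecutive windows share `overlap` chars.
pub fn chunk_text(text: &str, size: usize, overlap: usize) -> Vec<String> {
    assert!(size > overlap, "chunk size must exceed overlap");
    let chars: Vec<char> = text.chars().collect();
    let step = size - overlap;
    let mut chunks: Vec<String> = Vec::new();
    let mut start = 0;
    while start < chars.len() {
        let end = (start + size).min(chars.len());
        chunks.push(chars[start..end].iter().collect());
        if end == chars.len() {
            break; // last window reached the end of the text
        }
        start += step;
    }
    chunks
}

/// Embedding Service, step 2 (PLACEHOLDER for a real model):
/// a 26-dim letter-frequency vector, normalized to unit length.
pub fn toy_embed(text: &str) -> Vec<f32> {
    let mut v = vec![0.0f32; 26];
    for c in text.chars().filter(|c| c.is_ascii_alphabetic()) {
        v[(c.to_ascii_lowercase() as u8 - b'a') as usize] += 1.0;
    }
    let norm = v.iter().map(|x| x * x).sum::<f32>().sqrt();
    if norm > 0.0 {
        v.iter_mut().for_each(|x| *x /= norm);
    }
    v
}

/// Vector Store Service: in-memory stand-in for the Postgres table.
#[derive(Default)]
pub struct VectorStore {
    pub chunks: Vec<Chunk>,
}

impl VectorStore {
    pub fn insert(&mut self, chunk: Chunk) {
        self.chunks.push(chunk);
    }
}

/// Upload flow: Upload Service -> Embedding Service (chunk + embed) -> Vector Store.
/// Returns how many chunks were stored.
pub fn ingest_document(store: &mut VectorStore, document_id: u64, text: &str) -> usize {
    let pieces = chunk_text(text, 200, 40);
    let count = pieces.len();
    for piece in pieces {
        let embedding = toy_embed(&piece);
        store.insert(Chunk { document_id, text: piece, embedding });
    }
    count
}
```

The overlap means text near a chunk boundary appears in two neighbouring chunks, so it is less likely to be cut off from its context; production pipelines often measure chunks in tokens rather than characters.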

For the conversation (user Q&A) flow, there are a few more services:

- The Query Processor Service: handles users' queries by calling the Vector Store Service to search for relevant chunks, then sends those chunks to the Response Generator Service.

- The Response Generator Service: communicates with an external LLM provider to generate the answer and streams it back to the frontend.
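At its core, the Q&A flow is a nearest-neighbour search followed by prompt assembly. The sketch below ranks stored chunks by cosine similarity to a query embedding and builds the text the Response Generator Service might send to the LLM. `cosine_similarity`, `top_k`, and `build_prompt` are hypothetical helpers, the prompt wording is an assumption, and in the real system the similarity search would run inside Postgres rather than as an in-memory scan.

```rust
/// Cosine similarity of two equal-length vectors (0.0 if either is all zeros).
pub fn cosine_similarity(a: &[f32], b: &[f32]) -> f32 {
    assert_eq!(a.len(), b.len(), "embeddings must have the same dimension");
    let dot: f32 = a.iter().zip(b).map(|(x, y)| x * y).sum();
    let na: f32 = a.iter().map(|x| x * x).sum::<f32>().sqrt();
    let nb: f32 = b.iter().map(|x| x * x).sum::<f32>().sqrt();
    if na == 0.0 || nb == 0.0 { 0.0 } else { dot / (na * nb) }
}

/// Query Processor: return the texts of the `k` chunks most similar to `query`.
/// Each chunk is a (text, embedding) pair.
pub fn top_k<'a>(query: &[f32], chunks: &'a [(String, Vec<f32>)], k: usize) -> Vec<&'a str> {
    let mut scored: Vec<(f32, &str)> = chunks
        .iter()
        .map(|(text, emb)| (cosine_similarity(query, emb), text.as_str()))
        .collect();
    // Highest similarity first; total_cmp gives a total order even with NaN.
    scored.sort_by(|a, b| b.0.total_cmp(&a.0));
    scored.into_iter().take(k).map(|(_, text)| text).collect()
}

/// Response Generator input: retrieved chunks become numbered context for the LLM.
pub fn build_prompt(question: &str, context: &[&str]) -> String {
    let mut prompt = String::from("Answer the question using only the context below.\n\n");
    for (i, chunk) in context.iter().enumerate() {
        prompt.push_str(&format!("[{}] {}\n", i + 1, chunk));
    }
    prompt.push_str(&format!("\nQuestion: {}\n", question));
    prompt
}
```

For example, with chunks embedded as `[1.0, 0.0]`, `[0.0, 1.0]`, and `[0.9, 0.1]`, a query of `[1.0, 0.0]` with `k = 2` returns the first and third chunks, in that order.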

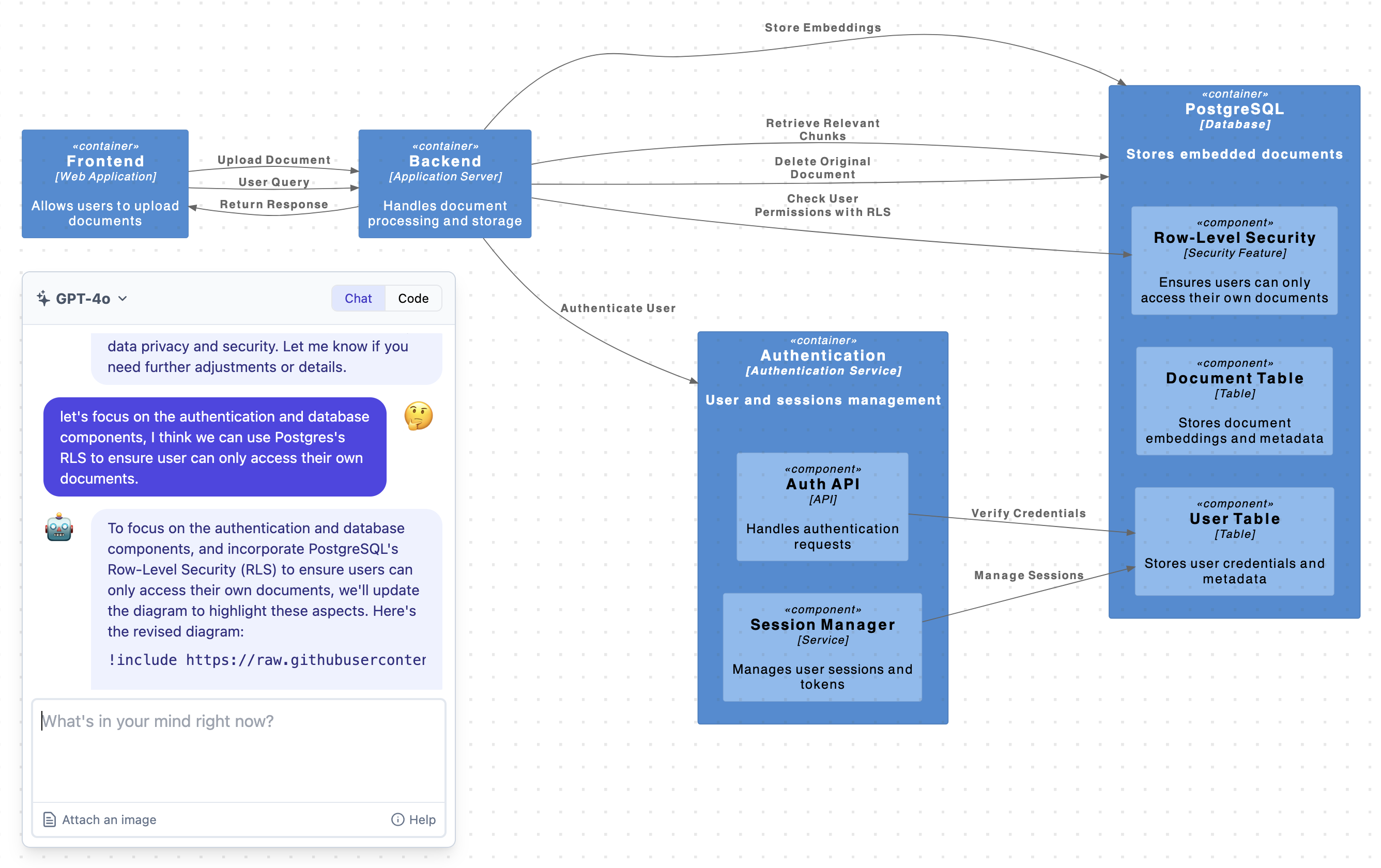

Step 4: Security Considerations

Security is especially important for this app because it deals with users' private documents. Let's see whether ChatUML can generate diagrams that highlight the security measures.

This time, instead of the backend, we'll dive into the details of the Authentication Service and the Database by asking the AI assistant to focus on them.

Interestingly, the AI brings up Row Level Security (RLS), a built-in PostgreSQL feature that lets you define per-table policies controlling which rows each user can read or modify, so a user can only access the rows that belong to them. That fits our requirement perfectly, so we'll use it in our application!
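Here is a minimal sketch of what RLS could look like for this app, assuming a hypothetical `chunks` table with an `owner_id uuid` column and a custom setting named `app.current_user_id` that the Rust backend sets at the start of each transaction with PostgreSQL's `set_config`. The SQL is kept as Rust string constants to be executed by whichever Postgres driver the backend uses. `visible_rows` and `can_insert` are hypothetical in-memory mirrors of the policy's `USING` and `WITH CHECK` clauses (with `u64` ids instead of UUIDs), handy for reasoning about or unit-testing the rule; Postgres itself enforces the real policy.

```rust
/// Hypothetical migration: RLS on a `chunks` table with an `owner_id uuid` column.
/// FORCE makes the policy apply to the table owner too.
pub const ENABLE_RLS_SQL: &str = "
ALTER TABLE chunks ENABLE ROW LEVEL SECURITY;
ALTER TABLE chunks FORCE ROW LEVEL SECURITY;
CREATE POLICY chunks_owner_only ON chunks
    USING (owner_id = current_setting('app.current_user_id')::uuid)
    WITH CHECK (owner_id = current_setting('app.current_user_id')::uuid);
";

/// Run inside each request's transaction, binding $1 to the authenticated
/// user's id as text; `true` makes the setting local to that transaction.
pub const SET_CURRENT_USER_SQL: &str =
    "SELECT set_config('app.current_user_id', $1, true)";

/// A row of the hypothetical `chunks` table (u64 ids stand in for UUIDs).
#[derive(Debug, Clone, PartialEq)]
pub struct ChunkRow {
    pub owner_id: u64,
    pub text: String,
}

/// Mirror of the USING clause: only rows owned by the current user are visible.
pub fn visible_rows(rows: &[ChunkRow], current_user_id: u64) -> Vec<&ChunkRow> {
    rows.iter().filter(|r| r.owner_id == current_user_id).collect()
}

/// Mirror of the WITH CHECK clause: writes must produce rows owned by the current user.
pub fn can_insert(row: &ChunkRow, current_user_id: u64) -> bool {
    row.owner_id == current_user_id
}
```

Note that superusers and roles with the `BYPASSRLS` attribute always bypass row security, so the backend should connect to Postgres with an ordinary role for the policy to take effect.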

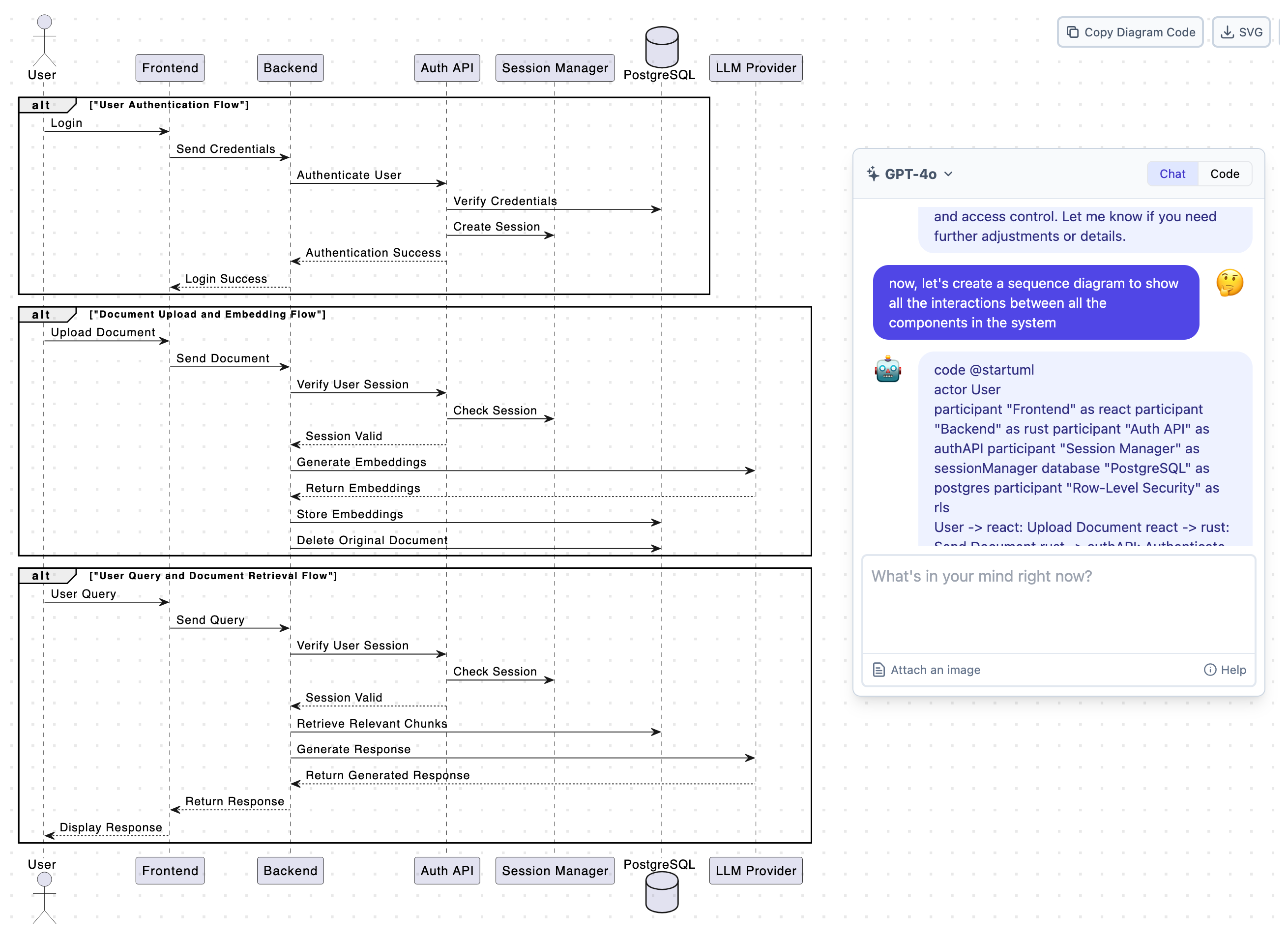

Step 5: Sequence Diagrams for Flow Visualization

At this point, we've done enough exploring to have a good understanding of the system we're building. Before writing any code, we can ask the AI to generate sequence diagrams that capture the flow of interactions within the system.
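If you'd like to keep a sequence diagram next to the code and regenerate it as the design evolves, one option (an assumption on our part, not something ChatUML requires) is to describe a flow as data and render it as PlantUML sequence-diagram text. The sketch below encodes the Q&A flow from Step 3; `Message`, `qa_flow`, `to_plantuml`, and the participant identifiers are hypothetical names.

```rust
/// One arrow in a sequence diagram: sender, receiver, and message label.
pub struct Message {
    pub from: &'static str,
    pub to: &'static str,
    pub label: &'static str,
}

/// The Q&A flow from Step 3, in order (participant names are hypothetical).
pub fn qa_flow() -> Vec<Message> {
    vec![
        Message { from: "Frontend", to: "QueryProcessor", label: "user question" },
        Message { from: "QueryProcessor", to: "VectorStore", label: "search relevant chunks" },
        Message { from: "VectorStore", to: "QueryProcessor", label: "matching chunks" },
        Message { from: "QueryProcessor", to: "ResponseGenerator", label: "question + chunks" },
        Message { from: "ResponseGenerator", to: "LLMProvider", label: "prompt" },
        Message { from: "LLMProvider", to: "ResponseGenerator", label: "generated answer" },
        Message { from: "ResponseGenerator", to: "Frontend", label: "streamed answer" },
    ]
}

/// Render the messages as PlantUML sequence-diagram source text.
pub fn to_plantuml(messages: &[Message]) -> String {
    let mut out = String::from("@startuml\n");
    for m in messages {
        out.push_str(&format!("{} -> {}: {}\n", m.from, m.to, m.label));
    }
    out.push_str("@enduml\n");
    out
}
```

Calling `to_plantuml(&qa_flow())` yields text that starts with `@startuml`, has one `A -> B: label` line per message, and ends with `@enduml`, which any PlantUML renderer can turn into a diagram.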

By leveraging the power of ChatUML, we've gone from a vague idea to a well-defined architecture for our "Chat to Document" application. This approach allows for rapid prototyping, efficient collaboration, and a more robust final product.

Now, are you ready to build your next product? Let ChatUML give you a hand! 😁